Coaching feedback

What should a coach focus on: outcomes or activities? We explore the differences between high performance coaching and continuing professional development (CPD).

Recent experiences from a coaching course

About this article: there are two versions. This one is for those of us who are primarily interested in continuing professional development (CPD) and medical education; the other is more targeted at motorcycle safety and high performance riding.

There are some interesting parallels, so feel free to flip back and forth between the two versions.

I recently took part in a high performance motorcycle course, where we were coached intensively on techniques for the race track. My own racing days date back decades, to skittering down icy slalom courses. I have no intention of becoming a motorcycle racer (plus my wife would kill me), so why on earth do such a course?

Simply put, this is a great place to learn and practice higher level skills in a safer environment. Safer?? Absolutely:

no oncoming traffic

all the safety gear (including an air bag vest and armor)

no potholes, gravel, ice/mud/cowshit - just clean dry pavement

no surprise obstacles such as tractors or cars pulling out of side roads

These are the things that make life "interesting" (and shorter) for the motorcyclist on the road. Removing them means that you can focus on what you need to when riding, allowing you to try to push yourself and your bike a little harder, with fewer distractions and much more safety if you exceed your limits.

There was an intense amount of coaching, and numerous different evaluation and feedback methods (see the section on other feedback modalities below). I came away from the course a much better and safer rider. But looking back on the course, and the methods used, I realized that there are many parallels to my day job in CPD. While there have been many improvements in CPD and adult learning in recent years, there are clearly things we can do better.

It was quite telling that, at the next CPD team meeting I attended, when I enthusiastically extolled how this course used lots of data to provide feedback to me as the rider, the team leader said, "But we do that now. That is the purpose of our [analytics] group, to provide data to improve learning…"

So what is different?

Analogies between motorbike tracks and CPD

Thinking back on the differences, the key factor is that, in CPD, we focus on outcomes and not what people are doing. This is like the motorcycle coach saying to me, "your laptimes are ten seconds slower than your group." When I ask, "Ok, coach, what do I do to drop my laptimes?", it would not be helpful for him to say, "Ride faster".

This sounds so obvious. And yet, so much of our data-informed feedback to practitioners boils down to just this:

"Your surgical infection rates are too high"

"Your prescribing costs are too high"

"You request too many x-rays"

We sometimes do better by providing courses, workshops and guidelines that suggest a bit more context:

When starting BP meds, thiazides are cheaper…

With ankle injuries, tenderness in these areas matters more…

…but we rarely provide timely data about what people are actually doing in practice. For comparison, one dramatic highlight of the day at the motorcycle course shows the difference.

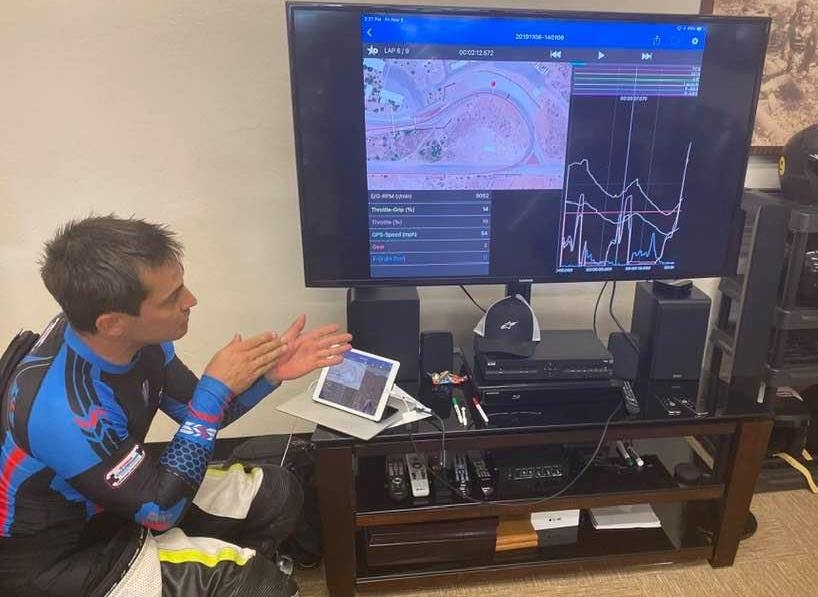

We had the opportunity to ride a telemetry bike, loaded with sensors, for 2 sessions of 3 laps each. After each session, we sat down with the coach and he showed us in fine detail exactly where and how hard to brake on the course. His graphs showed us exactly what we were doing wrong and compared our traces with those of experts, which clearly showed a better way to brake.

The second session showed an immediate decrease in laptime of 5 seconds (the outcome data), but more informative was seeing the improvements in the braking patterns and timing. This made so much more sense. This is activity stream data. This is what made the difference and improved the outcome.

Now, the magic of the sensor data was that it provided metrics that the coach could not see. For many of the other sessions, we also received feedback from a coach and/or a video camera riding directly behind us. And yes, they can tell the binary status of your brakes (i.e. on/off) from the brake light. But that pales in comparison with a sensor that measures the exact amount of pressure being applied to the brake pads at any given moment.

This difference may seem trivial to a non-motorcycle rider, but learning to use your brakes well, in every situation, at any speed or lean angle, is crucial to your very survival. https://www.youtube.com/watch?v=iW8RACCf4GM

We can do better in CPD by providing more timely feedback on what people actually do in a given situation, rather than aggregated or averaged outcomes. Even specific examples lose their impact when delayed by 3 months: compare the effect that a photo radar ticket has on driver behaviour with that of being pulled over by a stern officer.

It is interesting that the main studies report the cost/benefit of photo radar in global numbers. We are not disputing those figures, but they also illustrate the use of outcomes rather than activity metrics. Yes, slowing people down globally is a good thing. But what about targeted interventions focused on high risk sites (police services tend to place radars at high revenue sites, not high risk sites), or on high risk drivers? The insurance company dongle, which directly measures driving behaviour, is more likely to be effective for this. https://www.tandfonline.com/doi/abs/10.1080/15568310801915559 - this study from Queensland does show that manned enforcement is more effective at reducing serious accidents and modifying high risk driver behaviour.

How do you get to Carnegie Hall?

As in the old joke, practice, practice, practice…

In many of our educational offerings, we take great care to present information in interesting or entertaining ways. We value learner engagement; we fret over poor satisfaction ratings; but we rarely measure a change in behaviour, and we rarely follow up to see whether what we taught is even retained.

We also rarely provide opportunities to practise on similar cases. Take one course that is otherwise well constructed and thoroughly prepared: the Practice-Based Small Group materials from fmpe.org. It does include a reflective element and opportunities for discussion, and many of the elements that we praise as essential components of adult learning are there. And, for those of us with young, still plastic brains, hearing it once is enough.

But for those who have been in practice for a while (and who most need to be updated), this new information encounters the barriers of skepticism or cynicism, reduced retention, inertia of praxis, etc. Or, if the information is presented too early in the professional career, it lacks context or apparent relevance ("I will never see a case of that…").

Looking more closely at evaluations of the Practice Based Small Group (PBSG) program, of which I remain an ardent supporter, it is a little surprising that there is not a broad swathe of evidence showing its effectiveness, even though it is highly praised as a paramount example of the best quality in CPD. https://academic.oup.com/fampra/article/21/5/575/523964 - a nicely done study which did show a benefit of PBSG on prescribing. But it was a single intervention module, with no follow-up cases or look at longer-term effects. https://www.cfp.ca/content/53/9/1477.short - this article nicely describes the much-praised self-reflection tool. But this is still self-assessment, not measurement, and the only example the authors cite of an effect on practice is the same Better Prescribing Study cited just above. In https://onlinelibrary.wiley.com/doi/abs/10.1002/chp.1340230205, strong claims are made about Commitment to Change statements. We think that these are more effective, but in the study, the change appears to have been self-reported. Despite access to PharmaNet data, I cannot see actual data in these papers which documents a change in prescribing practice. They write about "Preference Difference" and intention to change, but is there actual change?

Practice is obviously more important when there is a kinesthetic element in the coaching scenario. Indeed, even with the term "coaching", we risk extending the sports analogy too far. For skills where manual dexterity, timing and rapid event sequencing all matter (such as intubation, cardiac resuscitation, hemorrhage control during surgery), simulation of high risk events in a safe manner, with frequent practice and data-rich feedback, is an essential component of maintaining those skills.

But what about other, less dramatic elements of professional practice? As noted above, staged reminders and repeated opportunities to practise still help. Such staging and repetition lie at the core of the evolution in language teaching, seen in programs like Rosetta Stone (www.rosettastone.com/).

Other feedback modalities

What other modalities did we have at the motorcycle course? We reflect on these, not because the course was the epitome of adult learning, but because it used many of the same techniques that we currently use in CPD, and so some comparisons may be illustrative.

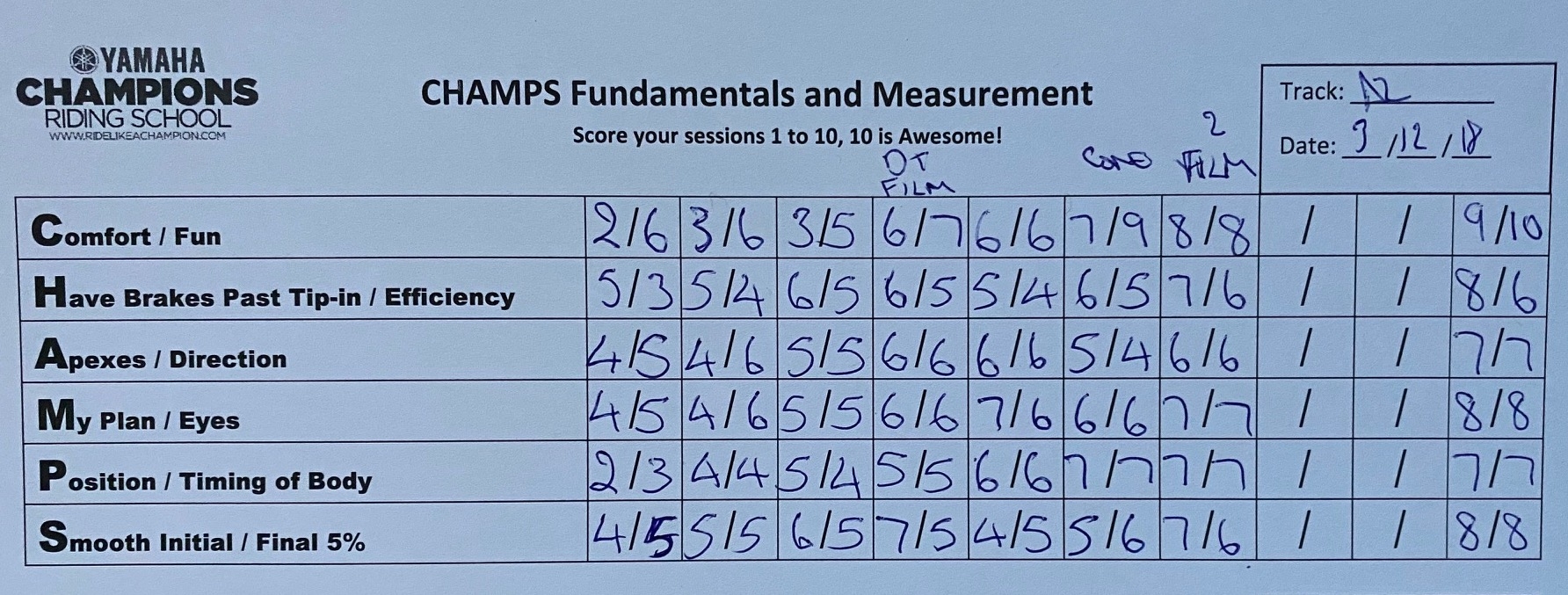

Self-rating scales

After each set of laps, we had to reflect on how we felt we had just done and complete a simple paper scale.

This is one of the most commonly used assessment methods because it is easy and cheap to do. On our course, it did have value in that it made you think about what you planned to work on in the next set of laps. If you don’t go out with a plan, improvements will not magically happen by themselves. But the approach lacks any kind of objectivity. In this course, there was no clear guidance on what to use as anchors, which led to some inconsistency and to re-evaluating what one regards as a 10. For example, in the above image, if you examine the trend across the bottom line: did the drop in numbers reflect a realization that I was not as smooth as I thought (and so reduced my scores to something more realistic), or did it reflect that I was trying too hard and thus becoming less smooth? This is not so important for self-reflection, but it does render the tool less useful for any external evaluation.

Now, I certainly don't mean to pick on YCRS and their use of this approach in our sessions. It remained a good guide for what to work on next and kept us focused as we became tired through the day. In this, it achieved its goal, and was great value for money. I am criticizing the general approach because it is over-used in medical education, and I am simply using the deficiencies of this method to illustrate why we should not rely on it too much. YCRS did not do this. However, quite a few medical education interventions are evaluated only by this method: self-reporting of an impression. We can do better.

Coach verbal feedback

After each set of laps, you might receive some pointers from one of the coaches.

"Your tires are cold. It will take at least a lap for them to warm up and provide good grip."

There was a very good 1:2 coach-to-student ratio, which helped but, as the course directors pointed out, it was up to each of us to seek out guidance and make it useful for our own needs. All the coaches were very experienced riders but not all were very experienced coaches, which led to some variation in the quality of feedback. However, such is the gap between coach and rider that any small tidbit is gratefully received. There was no attempt to standardize the quality of the coaches' verbal feedback. This does not detract much from its primary purpose but again makes it less useful as a means of external evaluation.

In the medical education domain, this essentially describes the preceptor model: a coaching style of teaching, where the teacher models desirable behaviours to a small set of learners, with immediate practice-based feedback. There is also little attempt to standardize the teaching because it is based on the immediate experience. Each preceptor-learner dyad will be tackling different cases and challenges during the clinic. But it is rich and effective because both sides of the dyad can readily appreciate when there are improvements.

Peer verbal feedback

An unanticipated mode of feedback was peer commentary.

"Your riding is really smooth... not aggressive… smooth… great for the road…"

This was a group of riders who were all highly invested in their own success but, more importantly, this was not a zero-sum game. You were not competing with each other and there was no incentive to outdo the other guy. Indeed, we saw the opposite of the tragedy of the commons: it was more of a cooperative game. It was in each rider’s interest to see their peers do better. Not only did helping each other feel good, it also made each rider’s task easier if they had to spend less time dodging around those who were struggling, and safer if everyone's skill levels were rising. A rising tide lifts all boats. This form of feedback was highly informal, but clearly influenced some riders, mostly for the better.

Still photos

We had a professional photographer who patrolled the track each day.

These still images were useful reminders of certain key actions. For example, it is important to turn your head, eyes and shoulders towards where you want to go. In the shot above, you can see that the rider has turned only head and eyes to the right.

The photographer was very experienced and knew the track and his audience well. At first, one might assume that his job was to make us look good: nobody wants to buy pictures of themselves looking like a klutz. However, he was quick to provide previews of his images throughout the two days, and seeing still images of yourself reveals a different facet of your riding.

Certain things like upper body position, knee position and placement of your bike on the track are often easier to see when things are distilled down to a single image.

Now in medical education, we are less concerned with still images. What we look like in practice is not so important. But we do have an equivalent in clinic: the chart-directed case discussion. This is where we can use a simple 'snapshot' from the electronic medical record (EMR), looking at one case encounter during the day. Rather than angle and position, one can look at how the case is documented or what tasks/actions arose during case management. These are also static because they have already occurred. But they can still prompt interesting discussions and suggestions for improvement.

Video feedback

The video feedback sessions were the highlight for most riders. As with CPD video sessions about patient encounters, you tend to be your own worst critic. Getting past the false modesty is a key aspect of this. The most important thing to focus on is the flow of movements, in contrast to the still shots mentioned above. The still photo simplifies and clarifies certain elements. But most of your actions are a dynamic series of coordinated activities: when to shift your weight, when to turn into the corner, what sort of line you are riding through a corner compared to the experts and to your peers.

Key points could be frozen for comparison or easily rewound. Each of these sessions was highly valued but of course it is also costly in resources and effort. Each student typically received 2-3 video sessions over the two day course. More would be great but this is not practical within the economics of the course. (This same limitation applies in healthcare professional teaching.) There was some additional value to be gained in watching each other’s videos. Many of us tended to have similar errors and it helped to have this reinforced while watching others.

This is very similar to the video feedback sessions that we use to evaluate how well we handle patient encounters, which are both well regarded and resource-intensive. There is a subtle difference to be aware of here, because there has been a recent tendency to draw overly close comparisons between sport or music coaching and coaching for healthcare professionals. In music and certain sports, there is much repetition, with a much clearer target for optimal performance.

There is also relatively little variation in context or challenge. For example, on the motorcycle course, each lap would take around 2-3 minutes, with pretty much identical conditions each lap. By contrast, each patient presentation, even if simulated by a standardized patient, can be quite different.

Indeed, one of the most valuable exercises that we had on the bike course was when the instructors placed traffic cones at random points on the course, forcing you to change your line and speed with little notice. This was a great exercise for road riding where you never know what you are going to find around the next corner. Being able to brake hard and safely mid-way through a curve is something that has helped my road riding enormously.

Simulation labs, with repeatable case presentations of medical challenges, offer a similar environment and data-informed feedback with video monitoring. But, as noted above, they are resource intensive, so they can be offered only occasionally.

Insta360 video

As a personal (n=1) experiment, I also took a special video camera with me and bolted it to the bike for a few laps.

This camera is designed to record a full 360-degree panorama in high resolution all the time. There is no such thing as missing the shot because the camera was facing the “wrong direction”. Action cameras like GoPro (founded in 2002), with their very wide angle of view, have become very popular because you do not have to carefully align the camera with what you want to capture. This is even more true of a 360-degree camera.

The software that comes with the camera has some additional useful features. You can fix the point of view in a certain direction, so that it remains the same no matter which way the camera housing is facing. You can also change the playback perspective in post-production, allowing you to focus on certain actions or body position issues, on where you are on the track, or on what is happening around you.

Because all of these perspectives are captured all the time, you can also review multiple perspectives of the same activity sequence. You do not need to do this often but it is invaluable for isolating certain problems that are hard to see from the perspective of the coach or video bike riding close behind you.

In CPD sessions, this kind of perspective is invaluable for team-based learning, especially when there is a tense flurry of interactions. It is very easy to review non-verbal cues and actions in minute detail, changing the perspective to zoom in on particular actions of interest, rather than worrying about whether the cameras were pointing in the right direction. For intense team simulations, such as cardiac resuscitation, being able to watch in all directions and see how the team interacts has obvious benefits. But the same approach can be used in leadership teaching sessions, providing feedback on personal interactions, especially during crucial conversations.

Telemetry data

In a special session, I was given the opportunity to ride a few laps on a telemetry bike. This was not part of the regular course. At first, I was hesitant, believing this to be aimed at top racers, where every millisecond counts. Not me.

I am so glad that they persuaded me to try this. Even for a less skilled rider like myself, this made an enormous difference. We had two sets of three laps on the telemetry bike, with a review of the data immediately after each session. The bike had sensors to measure several parameters, including brakes, throttle and lean angle. Other parameters are also measured but these are the primary metrics.

When a coach or video camera is riding behind you, they can tell when your brakes are on/off from the brake light and they have a rough idea of how fast you are decelerating. Having the exact tracing of how smoothly you apply the brakes and when this happens during the turn is startlingly useful, even for an intermediate rider like me.
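To illustrate why the trace tells you more than the brake light, here is a minimal Python sketch using made-up numbers. It assumes brake pressure is sampled at equal distance steps from the braking marker to the apex and normalized to 0 (off) to 1 (maximum); the traces, the threshold and the function names are all hypothetical, not the telemetry bike's actual data or software. The two traces produce identical brake-light patterns, yet differ clearly in how hard and how abruptly the brakes are applied.

```python
# Hypothetical brake-pressure traces, normalized 0.0 (off) to 1.0 (maximum),
# sampled at equal distance steps from the braking marker to the apex.
EXPERT = [0.9, 0.8, 0.6, 0.4, 0.25, 0.1, 0.0]  # firm on, then trailed off
RIDER = [0.2, 1.0, 1.0, 0.9, 0.3, 0.1, 0.0]    # hesitant, grab, abrupt release


def brake_light(trace, threshold=0.05):
    """What a following coach sees: only whether the brake light is on."""
    return [p > threshold for p in trace]


def mean_abs_gap(trace, reference):
    """Average absolute difference between a trace and a reference trace."""
    if len(trace) != len(reference):
        raise ValueError("traces must be sampled at the same points")
    return sum(abs(a - b) for a, b in zip(trace, reference)) / len(reference)


def largest_step(trace):
    """Biggest change in pressure between neighbouring samples (abruptness)."""
    return max(abs(b - a) for a, b in zip(trace, trace[1:]))


if __name__ == "__main__":
    print("Same brake light:", brake_light(RIDER) == brake_light(EXPERT))
    print(f"Mean gap from expert: {mean_abs_gap(RIDER, EXPERT):.2f}")
    print(f"Largest step, rider: {largest_step(RIDER):.2f}, "
          f"expert: {largest_step(EXPERT):.2f}")
```

With these invented traces, the brake-light view is identical for both riders, while the largest step is 0.80 for the rider against 0.20 for the expert: the kind of difference that only a continuous measurement reveals.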

A key component of the course was learning a technique called trail braking, where you trail off the brakes as you lean more into the turn. Getting this right makes you much more stable and allows you to compensate for all sorts of surprises, both on the track and on the road. All riders should learn this technique.

In a panic situation, we all tend to grab the brakes too roughly, and this is one of the more common causes of a rider going down. Repetition, in a safe environment, is essential: to the point where it becomes second nature.

While track practice and coaching give you some idea of how to do this well, the coach is inferring your actions much of the time. Being shown in precise detail exactly what you are doing is so much more effective. In only two sessions, my trail braking improved dramatically, as did my confidence. This saved my bacon on some subsequent road rides, with rapid transitions of tire grip and road surface. Such things became “interesting” rather than sphincter-tightening.

An equivalent situation in medical education might be working on cardiac resuscitation with one of the new smart dummies (to be a little oxymoronic). These provide precise feedback not only on your rate of chest compressions (yes, we can all sing along to Staying Alive!) but also on the crucial component of how deeply you compress, which is much harder to judge visually or by feel. But it makes all the difference to keeping your patient alive. Data matters.

Discussion

The session with the telemetry bike got me thinking about how we tend to apply feedback on performance in clinical situations. We tend to focus on outcomes, not activities/actions. As noted at the start, this is like using laptimes, and the accompanying instruction to “ride faster”.

Most learning tools tend to capture merely the completion of an activity, along with a single percentage score. Indeed, in many learning systems, this is all that SCORM, the best-known standard for tracking learner performance across a variety of scenarios, is used to record. That is like going back to high school math tests and relying on whether little Johnny got the right answer, not whether he used a correct approach in getting to that answer.
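To make the contrast between outcome tracking and activity tracking concrete, here is a minimal Python sketch with entirely hypothetical data. The `OutcomeRecord` class stands in for completion-plus-score tracking; the `Statement` class is loosely modelled on the actor-verb-object statements of the Experience API (xAPI), but it is a simplified illustration, not that specification's actual format. Two learners on a simulated ankle-injury case get the same score, yet only the activity stream shows that just one of them examined the ankle before requesting an x-ray.

```python
from dataclasses import dataclass


@dataclass
class OutcomeRecord:
    """Completion-and-score tracking: one summary row per learner."""
    learner: str
    completed: bool
    score: float  # fraction correct, 0.0 to 1.0


@dataclass
class Statement:
    """One activity-stream entry, loosely modelled on actor-verb-object."""
    actor: str
    verb: str
    obj: str
    t: int  # seconds since the simulated case started


# Hypothetical data: identical outcomes for two learners.
OUTCOMES = [OutcomeRecord("A", True, 0.8), OutcomeRecord("B", True, 0.8)]

# Hypothetical activity stream for the same simulated ankle-injury case.
STREAM = [
    Statement("A", "examined", "ankle", 30),
    Statement("B", "requested", "x-ray", 20),
    Statement("B", "examined", "ankle", 90),
]


def first_time(stream, actor, verb, obj):
    """Earliest time the actor did verb-obj, or None if they never did."""
    times = [s.t for s in stream
             if s.actor == actor and s.verb == verb and s.obj == obj]
    return min(times) if times else None


def examined_before_imaging(stream, actor):
    """True if the actor examined the ankle before (or instead of) an x-ray."""
    exam = first_time(stream, actor, "examined", "ankle")
    xray = first_time(stream, actor, "requested", "x-ray")
    if exam is None:
        return False
    return xray is None or exam < xray


if __name__ == "__main__":
    for record in OUTCOMES:
        print(record.learner, record.completed, record.score,
              examined_before_imaging(STREAM, record.learner))
```

The outcome table cannot answer the question about approach at all, while the stream answers it with a simple query over time-stamped actions. That is the "how did Johnny get the answer" information that a completion-and-score record throws away.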

Breaking this down, our outcomes-based feedback is delayed (photo radar tickets), aggregated/averaged, subject to influence by many factors beyond our control (noise) and rather non-specific. Doing more than this has usually been regarded as impractical. But we now have the tools and techniques available to measure what our learners actually do, and when they do it. We can move beyond inferred conclusions, which we know are subject to bias. Our teachers are keen observers, able to detect subtleties and nuance. Just don’t expect them to be consistent, unbiased judges. And don’t just tell them to “ride faster”.
